Computers and Logic Now Deemed to be Racist

U.S.A. – Last year, Amazon was figuring out where it should offer free same-day delivery service to reach the greatest number of potential Prime customers. So the company did what you’d expect: It used software to analyze all sorts of undisclosed metrics about each neighborhood, ultimately selecting the “best” based on its calculations. But soon journalists discovered that, time and time again, Amazon was excluding black neighborhoods.

It wasn’t on purpose, Amazon claims. Its system never even looked at race. Amazon said its choices were driven by business factors such as the number of Prime members in an area, and the excluded neighborhoods simply did not rank highest on those measures. By that account, the decision wasn’t about race; it was about data and logic. But Amazon was accused of racism regardless.

Liberal news reporters, Black activists and White Apologist bloggers all concluded that the software had somehow developed artificial intelligence and ‘learned’ prejudice. These purveyors of fake news now refer to all computer code as “AI” software, implying that every computer program out there is capable of rewriting itself to become prejudiced over time.

Mark Wilson, a senior writer at Fast Company, a business and technology magazine, wrote:

Instead, the software had essentially learned this prejudice through all sorts of other data. It’s a disquieting thought, especially since similar AI software is used by the Justice Department to set a criminal’s bond and assess whether they’re at risk of committing another offense. That software has learned racism, too: It was found to wrongly flag black offenders as high risk for re-offense at twice the rate of white offenders.

Amazon’s biased algorithm, like the Justice Department’s, doesn’t just illustrate how pervasive bias is in technology. It also illustrates how difficult it is to study it. We can’t see inside these proprietary “black box” algorithms from the outside. Sometimes even their creators don’t understand the choices they make.

Does this sound strangely familiar to you? It should. It’s just another variation on the micro-aggression theme.

Micro-aggression is a fallacy and a known form of ad hominem attack called Bulverism.

Bulverism, a term coined by C. S. Lewis, is a logical fallacy that combines a genetic fallacy with circular reasoning. The method of Bulverism is to “assume that your opponent is wrong, and explain his error”. The Bulverist assumes a speaker’s argument is invalid or false and then explains why the speaker is so mistaken, attacking the speaker or the speaker’s motive instead of the argument.

A common variation of this rhetorical sleight-of-hand has Guy A insisting that Guy B’s ‘real’ reasons for holding a particular position are ‘unconscious’ and so aren’t properly understood even by Guy B himself. Yet Guy A somehow possesses the ability to unpack the contents of Guy B’s ‘unconscious mind’ with laser-like clarity, all without being susceptible to any undue ‘unconscious’ influence of his own.

Do you understand this foolishness now?

Also, even the most novice computer users understand that computer programs don’t suddenly come alive and develop the ability to rewrite themselves. Articles abound on how difficult it is to achieve artificial intelligence (AI), and true, general-purpose AI has yet to be achieved.

But now it goes even further than that. Wilson reports that Sainyam Galhotra, Yuriy Brun, and Alexandra Meliou, researchers at the University of Massachusetts Amherst, claim they can “test these algorithms methodically enough from afar to sniff out inherent prejudices.”

That’s the idea behind Themis, a freely available piece of code that can mimic the process of entering thousands of loan applications or criminal records into a given website or app. By changing specific variables methodically, whether race, gender, or something far more abstract, it can spot [or rather, create] patterns of prejudice in any web form.

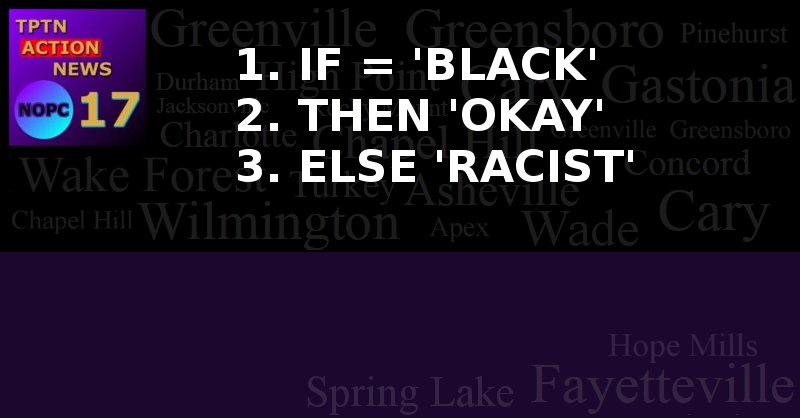
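The core trick behind this kind of testing is to hold every input fixed except one attribute and check whether the output changes; the Themis researchers call the resulting measure causal discrimination. The following is a minimal Python sketch of that check under stated assumptions: the decision function `hypothetical_decider`, the field names, and the value ranges are all hypothetical stand-ins, not Themis’s actual code or interface.

```python
import random
from typing import Callable, Dict, List

# Hypothetical applicant record: attribute name -> value.
Applicant = Dict[str, object]


def hypothetical_decider(applicant: Applicant) -> bool:
    """Hypothetical system under test: approves on income and credit score only."""
    return applicant["income"] >= 40_000 and applicant["credit_score"] >= 650


def random_applicant(rng: random.Random) -> Applicant:
    """Generate one synthetic applicant (field names and ranges are assumptions)."""
    return {
        "race": rng.choice(["A", "B"]),
        "income": rng.randrange(10_000, 100_000, 1_000),
        "credit_score": rng.randrange(300, 851),
    }


def causal_discrimination(
    decide: Callable[[Applicant], bool],
    attribute: str,
    values: List[object],
    trials: int = 1_000,
    seed: int = 0,
) -> float:
    """Fraction of synthetic inputs whose outcome changes when ONLY `attribute` changes."""
    rng = random.Random(seed)
    changed = 0
    for _ in range(trials):
        base = random_applicant(rng)
        outcomes = set()
        for value in values:
            variant = dict(base)        # copy; every other field stays identical
            variant[attribute] = value  # vary only the attribute under test
            outcomes.add(decide(variant))
        if len(outcomes) > 1:           # the decision depended on `attribute`
            changed += 1
    return changed / trials


if __name__ == "__main__":
    # The hypothetical decider never reads "race", so flipping it changes nothing.
    print(causal_discrimination(hypothetical_decider, "race", ["A", "B"]))  # 0.0
    # A rule keyed directly on "race" flips on every trial.
    print(causal_discrimination(lambda a: a["race"] == "A", "race", ["A", "B"]))  # 1.0
```

Under this measure, a program that does not read the varied attribute at all always scores zero, because the flipped inputs are otherwise identical.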

In plain language, they simply force race into the program’s inputs, compare how Black applicants fare against those of other races, and then, if Black applicants come out behind in any way, deem the programmer’s code racist. This flies in the face of Martin Luther King Jr.’s hope that people would be judged not “by the color of their skin but by the content of their character.”
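The outcome comparison described above corresponds roughly to what the Themis researchers call group discrimination: the gap between the highest and lowest rate of favorable outcomes across the values of one attribute. The sketch below computes that gap in Python; the decision rule `income_only` and the six-person `sample` are hypothetical and do not come from Themis. The rule never reads the race field, yet the score is nonzero because the two hypothetical groups differ in income. The Themis authors define the separate causal measure for exactly this reason.

```python
from collections import defaultdict
from typing import Callable, Dict, List

# Hypothetical applicant record: attribute name -> value.
Applicant = Dict[str, object]


def group_discrimination(
    decide: Callable[[Applicant], bool],
    applicants: List[Applicant],
    attribute: str,
) -> float:
    """Gap between the highest and lowest approval rate across values of `attribute`."""
    approved: Dict[object, int] = defaultdict(int)
    total: Dict[object, int] = defaultdict(int)
    for applicant in applicants:
        group = applicant[attribute]
        total[group] += 1
        if decide(applicant):
            approved[group] += 1
    rates = [approved[g] / total[g] for g in total]
    return max(rates) - min(rates)


# Hypothetical decider that never reads the "race" field.
def income_only(applicant: Applicant) -> bool:
    return applicant["income"] >= 40_000


# Hypothetical sample in which the two groups happen to have different incomes.
sample = [
    {"race": "A", "income": 50_000},
    {"race": "A", "income": 60_000},
    {"race": "A", "income": 30_000},
    {"race": "B", "income": 35_000},
    {"race": "B", "income": 45_000},
    {"race": "B", "income": 20_000},
]

# Group A approval rate is 2/3, group B is 1/3, so the gap is 1/3.
print(round(group_discrimination(income_only, sample, "race"), 3))  # 0.333
```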

Any attorney would tell you not to introduce such a thing into, or anywhere near, your company’s program code, because doing so would open you up to all sorts of liability issues. If your code does not use race as a factor, then your code is not racist. Period.